Time and lineage in text analysis

I've been working on a few parallel projects that involve topic modeling abstracts of management research publications (see Topics in Management Research, for example). My method of choice at the moment is Latent Dirichlet Allocation (LDA).

I'm finding that this class of methods is useful in ways I hadn't quite expected when I started this exercise about a year ago. Back then the goal was to test some hypotheses about management research in a causal inference/regression context. Since then I have been exploring the use of topic modeling for qualitative analysis. This sort of use of topic modeling is well represented in modern management research (see Hannigan et al., 2019, a tremendous, encyclopedic review of the subject).

Sidebar: I'm still grappling with the distinction between quantitative and qualitative research. The terminology is deployed, at least in the circles I'm in, in a way that implies an equivalence between qualitative and inductive research, and between quantitative and deductive research. I enjoy quantitative methods in the sense that I am a reasonably proficient programmer, and R and Python and my laptop (and occasionally AWS) are versatile and powerful computational tools. So I can get shit done. But I'm not necessarily hell-bent on doing deductive work. After all, I'm new to the field, and the field is new (relatively speaking) to the full potential of machine methods for inductive research. Or rather, inductive research that blurs the quantitative vs. qualitative boundary. It's a new frontier, and some exploration is in order.

© Anand Bhardwaj 2020

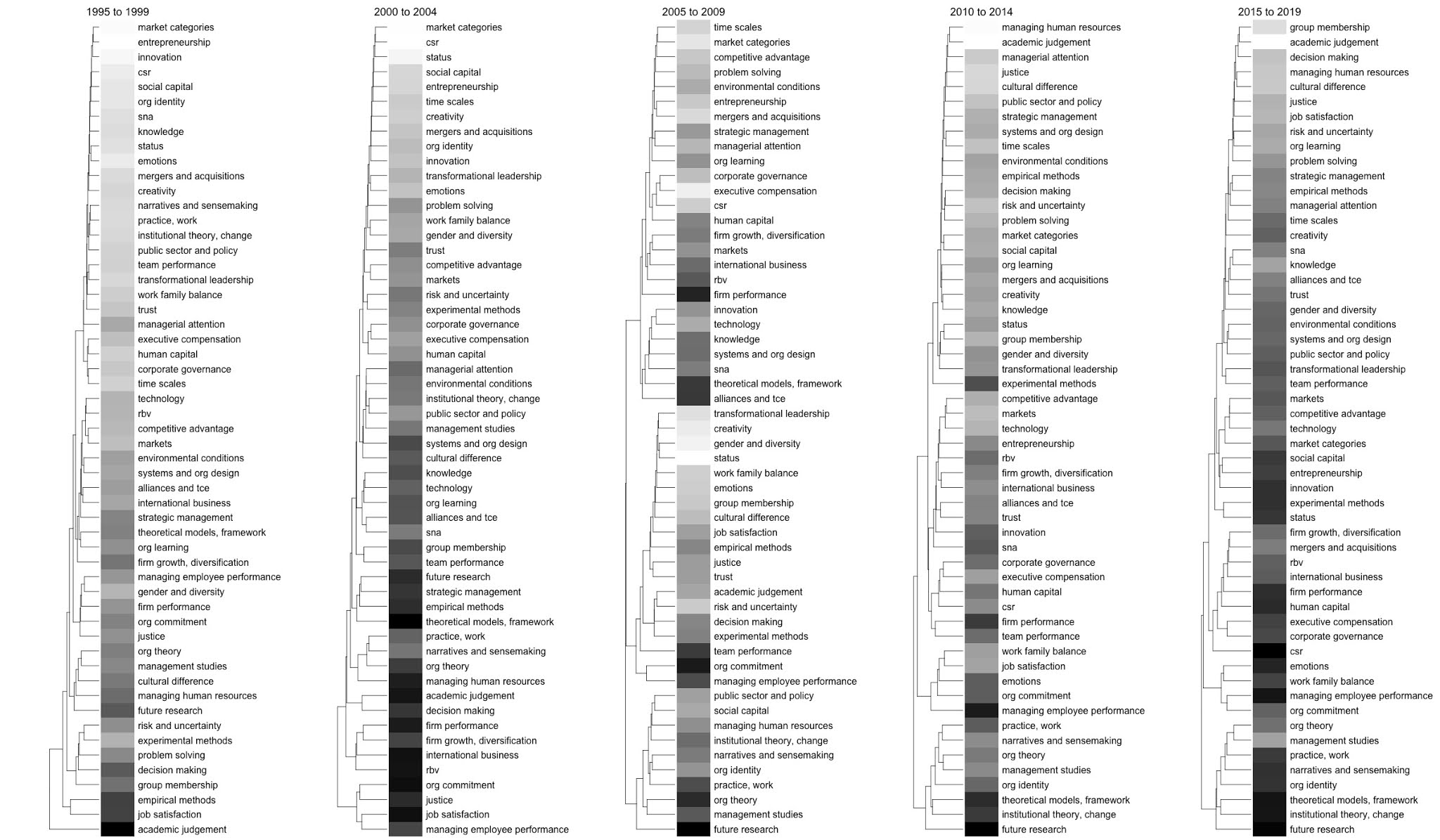

And so with something like LDA, the resulting data structure - the document-topic probability matrix - is a superb base on which to do a ton of fun stuff. Each document has a consistently estimated topic vector, so you can do things like compute distance measures and cluster documents. And if you have metadata like author, journal, publication date and exogenous events, you can analyze semantic differentiation (innovation) or conformity (isomorphism) over time, in reaction to those events.
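To make the "differentiation vs. conformity over time" idea a bit more concrete, here is a minimal sketch. It assumes each document is a (publication year, topic vector) pair taken from the document-topic matrix, and it scores each document by its distance from the previous year's average topic vector. The Hellinger distance, the yearly centroid, and the toy data are all illustrative assumptions, not the method used in these projects.

```python
from collections import defaultdict
from math import sqrt


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return sqrt(sum((sqrt(a) - sqrt(b)) ** 2 for a, b in zip(p, q)) / 2)


def yearly_centroids(docs):
    """Average the topic vectors of all documents published in each year."""
    by_year = defaultdict(list)
    for year, theta in docs:
        by_year[year].append(theta)
    return {
        year: [sum(column) / len(vectors) for column in zip(*vectors)]
        for year, vectors in by_year.items()
    }


def differentiation(docs):
    """Distance of each document from the previous year's centroid (None if no prior year)."""
    centroids = yearly_centroids(docs)
    scores = []
    for year, theta in docs:
        prior = centroids.get(year - 1)
        scores.append(None if prior is None else hellinger(theta, prior))
    return scores


# Hypothetical toy data: (publication year, topic vector over 3 topics).
toy_docs = [
    (2018, [0.8, 0.1, 0.1]),
    (2018, [0.6, 0.3, 0.1]),
    (2019, [0.7, 0.2, 0.1]),  # sits on the 2018 centroid: conformity
    (2019, [0.1, 0.1, 0.8]),  # far from the 2018 centroid: differentiation
]
scores = differentiation(toy_docs)
```

Low scores read as conformity with where the field was a year earlier, high scores as differentiation. Swapping the centroid for a window around an exogenous event would be the obvious next variation.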

And then there are the tree-based methods. My old flame. My first love.

I have been thinking a lot about the similarity between LDA and the good old phylogenetic methods for estimating trees with dated tips from timestamped RNA sequence data (e.g., BEAST). These methods use RNA sequence space and time metadata to construct family trees of the source viruses. Known mutation rates from specific nucleotides to specific nucleotides provide a probability structure for the estimator to create a plausible, parsimonious sequence of mutation and inheritance across time and space.

What if there were a way to transfer the phylogenetic method's use of time to content analysis of fields?

Mechanistically, what I am trying to imagine is a collaborative knowledge creation process that occurs at the level of a field. A complex interaction of knowledge creation, contestation and reflexivity that spans papers, conferences, correspondence, lectures, Zoom meetings, and the like. The fact that there are predictable patterns of semantic distance (measured as the Jensen-Shannon distance between the topic vectors of two abstracts) based on measures of social distance between authors is further evidence of a social/field-level process. The tree structures that form when clustering topic vectors of abstracts often map to journals or communities of scholars rather neatly.
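For reference, the Jensen-Shannon distance mentioned above can be sketched in a few lines. This version assumes two topic vectors of equal length that each sum to 1, and uses base-2 logarithms so the distance falls between 0 and 1. The function names are illustrative.

```python
from math import log2, sqrt


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i = 0 contribute nothing."""
    return sum(a * log2(a / b) for a, b in zip(p, q) if a > 0)


def jensen_shannon_distance(p, q):
    """Square root of the Jensen-Shannon divergence (base 2), bounded in [0, 1]."""
    m = [(a + b) / 2 for a, b in zip(p, q)]  # midpoint distribution
    divergence = 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)
    return sqrt(max(divergence, 0.0))  # guard against tiny negative rounding error


# Hypothetical topic vectors for two abstracts.
abstract_a = [0.7, 0.2, 0.1]
abstract_b = [0.1, 0.3, 0.6]
distance_ab = jensen_shannon_distance(abstract_a, abstract_b)
```

Unlike the raw KL divergence, this is symmetric and stays finite when a topic has zero weight in one of the two abstracts, which is part of why it suits pairwise comparison of topic vectors.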

So what if we used the publication date of the paper to represent when certain arrangements of topics started to appear in the field? Maybe add a parameter, segmented at the level of the journal, that represents the average time from submission to publication, plus the time for data collection and analysis. Maybe discretize the document-topic probabilities to categorize the arrangements.
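A rough sketch of those two preprocessing steps, under loudly stated assumptions: the 0.2 threshold, the journal names, and the lag values below are all made up for illustration, and a presence/absence coding is only one of many ways to discretize a topic vector.

```python
from datetime import date, timedelta


def discretize(theta, threshold=0.2):
    """Code each topic as present (1) or absent (0); the threshold is an arbitrary assumption."""
    return tuple(int(p >= threshold) for p in theta)


def estimated_origin(published, journal, lag_days, default_lag=365):
    """Shift a publication date back by a journal-level lag, in days.

    The lag stands in for submission-to-publication time plus time spent
    on data collection and analysis.
    """
    return published - timedelta(days=lag_days.get(journal, default_lag))


# Hypothetical journal-level lags in days (not real figures).
lag_days = {"Journal A": 400, "Journal B": 700}

arrangement = discretize([0.55, 0.30, 0.10, 0.05])
origin = estimated_origin(date(2019, 6, 1), "Journal A", lag_days)
```

The discretized tuple plays the role a sequence plays in the phylogenetic setting: a categorical state per position, which is what makes a transition-rate model between arrangements conceivable.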

Then we use a "dated tip" approach that constructs an ancestral tree of knowledge. The process model we are simulating is some kind of Rouleauvian knowledge bricolage process that also takes into account author social distance, geographic distance, institutional history, etc. So we are in effect estimating a semantic influence tree. Trace a line from Iron Cage Revisited to Institutional Change in Toque Ville, as it were. I need to sleep on this. I can see the blurred outline of this algorithm for extending LDA.
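To be clear about what follows: it is nowhere near a BEAST-style Bayesian estimator, and it is not the algorithm being imagined above. It is only a greedy baseline, assuming each paper has a date and a topic vector, that links every paper to the semantically closest paper published strictly earlier. The paper ids, years, and vectors are hypothetical, and social or geographic distance is ignored entirely.

```python
from math import log2, sqrt


def js_distance(p, q):
    """Jensen-Shannon distance (base 2) between two topic vectors."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl_to_midpoint(x):
        return sum(a * log2(a / b) for a, b in zip(x, m) if a > 0)

    return sqrt(max(0.5 * kl_to_midpoint(p) + 0.5 * kl_to_midpoint(q), 0.0))


def influence_tree(papers):
    """Map each paper id to the id of its nearest strictly earlier paper (None for roots).

    papers: list of (paper_id, year, topic_vector) tuples.
    """
    ordered = sorted(papers, key=lambda paper: paper[1])
    parents = {}
    for paper_id, year, theta in ordered:
        earlier = [other for other in ordered if other[1] < year]
        if not earlier:
            parents[paper_id] = None
            continue
        nearest = min(earlier, key=lambda other: js_distance(theta, other[2]))
        parents[paper_id] = nearest[0]
    return parents


# Hypothetical papers: (id, publication year, topic vector).
toy_papers = [
    ("A", 2000, [0.8, 0.1, 0.1]),
    ("B", 2005, [0.1, 0.8, 0.1]),
    ("C", 2010, [0.7, 0.2, 0.1]),
    ("D", 2015, [0.2, 0.7, 0.1]),
]
tree = influence_tree(toy_papers)
```

On the toy data, C attaches to A and D attaches to B, giving two lineages. A real dated-tip model would replace the nearest-neighbour rule with a likelihood over transitions between topic arrangements, which is where the analogy to mutation rates would have to do the work.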

All this to say, I think what I am trying to get at with the topic modeling of management research abstracts is something hopefully interesting and significant about how knowledge is created at the level of the field. And that something is a story that unfolds over time and over lineages.
