Engaging with Online Discourse

Over this summer, I've had the opportunity to experiment with the Twitter API's Academic Track product. I'm going to write about that today. I've been taking a break from this blog this summer to focus on my comprehensive exams (next month!), but I've been noodling around with some thoughts on online discourse and I feel it's time to put something down in words. Plus, it's like a palate cleanser from the endless volumes of papers I have been reading, a chance to produce instead of consuming.

First, some context: I love discourse. Words produced by people, often intended for other people to read. I like to look at it, to understand the motivations and intent behind words, to identify structures therein. And when I say structures, I mean the socially produced (often through repeated action) rules about what constitutes acceptable conduct in a particular social context, given the constraints and affordances of the technologies surrounding that context. A Giddensian flavor of structure, if you will. And so, ever since (or perhaps a little before) starting grad school, I've been playing with machine methods for analyzing (academic) discourse. That has been tremendously satisfying and has certainly helped me make sense of the world I live in (the world of academic discourse). But I also live in a social world that exists beyond the realm of academia, and so naturally my interests extended to massive online discourse.

When I say massive online discourse, I'm referring primarily to online social media platforms like Twitter, Facebook, etc., where social interactions are numerous, textual, public, and broadly accessible, but the phenomenon extends to other platforms where participation is more curated (like Stack Overflow or Slack, for example). These forms of discourse are, for many communities, the predominant form of social interaction, especially in today's pandemic-stricken world.

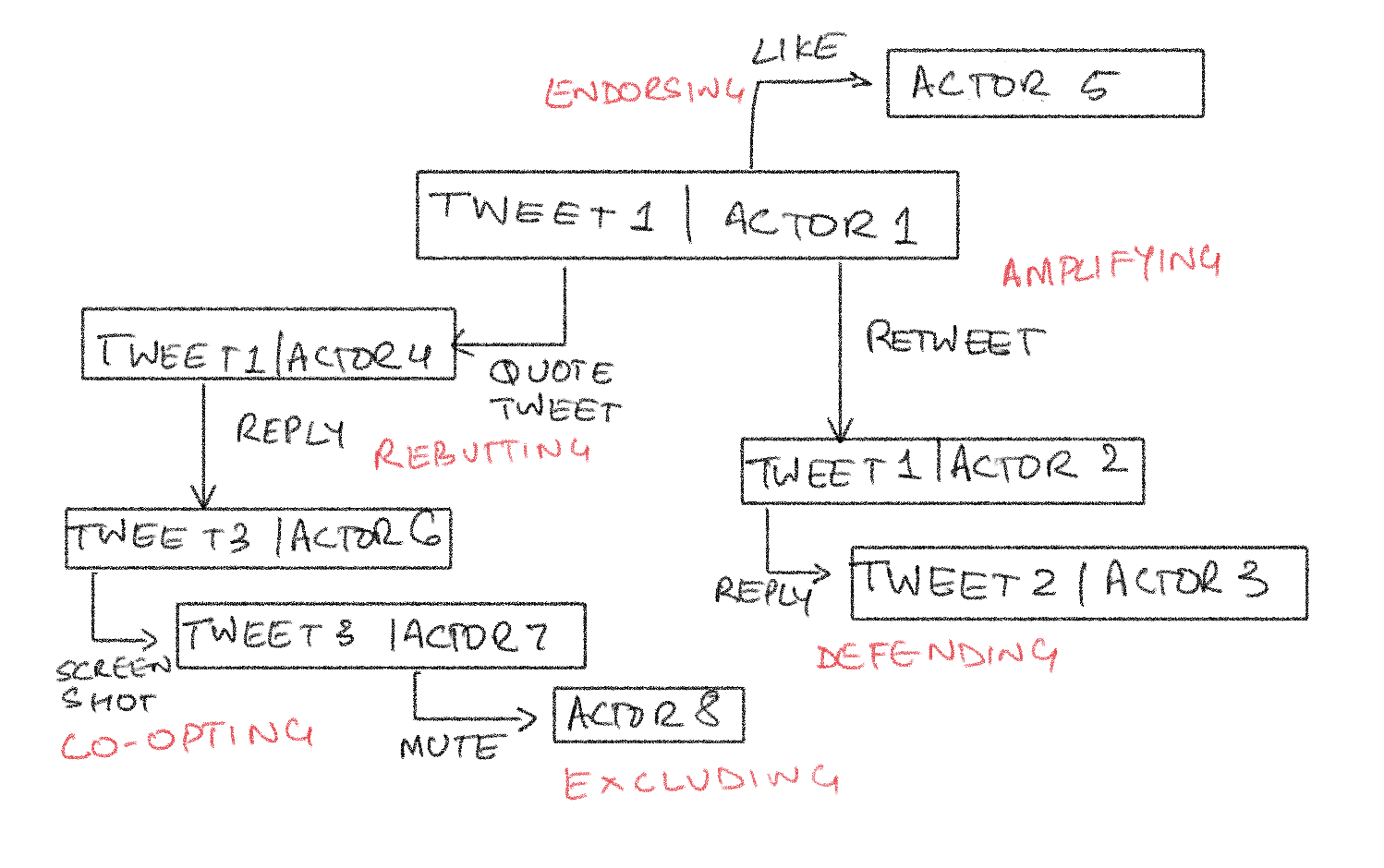

Academia is yet to catch up, I feel. What is an online conversation in this context? What are its bounds, who are the participants, and what are they doing?

So, what is an online conversation?

A tweet and its replies?

A tweet, its replies, and its retweets?

A tweet, its replies, its retweets, and the replies to the retweets?

A tweet, its replies, its retweets, the replies to the retweets, the quote tweets, and the replies to the quote tweets?

A tweet, its replies, its retweets, the replies to the retweets, the quote tweets, the replies to the quote tweets, a screenshot of the original tweet, replies to the screenshot, retweets of the screenshot, replies to the retweet of the screenshot, a post on Reddit about the screenshot, and the comments on that post?

Do we understand the phenomenon itself? I'm not sure we do. I'm not sure we have the theoretical vocabularies to truly engage with it. And that's exciting.

These are questions that have been sloshing around in my brain this summer, very much motivated by the fact that I have access to the Twitter Archive. That's right, the Academic Track Twitter API product provides researchers with access to the entire Twitter archive, going back to 2006. It's tremendous: an endless ocean of discourse and the metadata associated with that discourse (who, what, when).

I'll probably weigh in again on these matters, especially as research projects materialize from this data.

For now, here's the rundown on how to start playing with the data:

1. Go to developer.twitter.com, and get yourself an account.

2. Apply for an Academic Track research project. These applications are actually reviewed: I know people who didn't get approved for one. I'm not sure what the approval criteria are, but having some details about your plans appears to help. Put some time and thought into research design prior to applying. This step is important. Academic Track gives you access to the full archive for research purposes; commercial versions of this sort of access are very expensive.

3. Create an App. This is basically a set of credentials that Twitter gives you, which allows you to use something like R or Python to query and pull data from Twitter and produce analysis datasets. Each app is associated with rate limits (so you can only request a certain amount of data per unit of time).

4. Start exploring! Personally, I use R and a package called academictwitteR to get my data. This works for me because I'm predominantly interested in the text of tweets and this package allows you to quickly structure the data into tables, which is my preferred way of engaging. You can also store tweets as JSON files and reconstitute them into tables later.

Some basics:

# Install (once) and load required packages
# install.packages(c("academictwitteR", "dplyr"))
library(academictwitteR) # for pulling tweets
library(dplyr) # for data wrangling

# Enter the bearer token from your app
bearer_token <- ""

# PRIMARY DATA SET: pull tweets using a complex query
tweets.raw <- get_all_tweets(
  query = "(#keyword OR keyword) lang:en", # parentheses so lang:en applies to both terms
  start_tweets = "2006-06-01T00:00:00Z",
  end_tweets = "2021-06-30T23:59:59Z",
  bearer_token = bearer_token,
  n = Inf, # as many as possible
  page_n = 500, # tweets per request
  context_annotations = FALSE,
  bind_tweets = TRUE # return the results as a data frame
)

Go look at the syntax for query construction. There's a lot you can do with the core query itself using operators like OR, AND (just a space), parentheses, NOT (a "-"), and the various entities that Twitter allows you to use, like timestamps, user IDs, tweet IDs, hashtags, etc. One thing to watch: a space (AND) binds more tightly than OR, so use parentheses whenever you mix the two.
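To make the operator talk concrete, here is a minimal sketch that assembles a couple of query strings in plain R before handing them to get_all_tweets. The search terms and the username are made up for illustration; is:retweet and from: are standard Twitter search operators, but check the query documentation for what your access level allows.

```r
# Hypothetical search terms; swap in your own.
terms <- c("#keyword", "keyword")

# OR binds more loosely than a space (AND), so wrap the alternatives in parentheses.
any_term <- paste0("(", paste(terms, collapse = " OR "), ")")

# English-language tweets that are not retweets: "-" negates an operator.
query_originals <- paste(any_term, "lang:en", "-is:retweet")

# The same terms, restricted to a single (made-up) account.
query_one_user <- paste(any_term, "from:some_username")

print(query_originals)
print(query_one_user)
```

Building the string this way keeps the grouping explicit, which matters once the list of terms grows beyond two or three.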

Once you have a set of tweets based on the content of the tweets, you can do things like bring in information about the users.

# Some basic information on users
users.raw <- get_user_profile(unique(tweets.raw$author_id), bearer_token)

# Assemble a flat file: one row per tweet, with the author's username and name attached
tweets.text <- merge(
  tweets.raw[, c("text", "id", "created_at", "author_id", "conversation_id")],
  users.raw[, c("username", "name", "id")],
  by.x = "author_id", by.y = "id", no.dups = FALSE
)[, c("text", "id", "created_at", "username", "name", "author_id", "conversation_id")]
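As a first, very partial stab at the "what is a conversation?" question, one option is to group a flat file like this by conversation_id. The sketch below runs on a toy data frame whose values are entirely made up; it only assumes the columns shown in the merge above. Keep in mind that Twitter's conversation_id covers a tweet and its reply thread only (not retweets, quote tweets, or screenshots), and that a keyword query returns only the tweets in a thread that match the query.

```r
library(dplyr)

# Toy stand-in for tweets.text; every value here is invented for illustration.
toy <- data.frame(
  id              = c("1", "2", "3", "4", "5"),
  conversation_id = c("1", "1", "1", "4", "4"),
  username        = c("ann", "bob", "ann", "cat", "ann"),
  created_at      = c("2021-06-01T10:00:00.000Z", "2021-06-01T10:05:00.000Z",
                      "2021-06-01T11:00:00.000Z", "2021-06-02T09:00:00.000Z",
                      "2021-06-02T09:30:00.000Z"),
  stringsAsFactors = FALSE
)

# One row per conversation: size, number of distinct voices, and time span.
# ISO 8601 timestamps in the same format sort correctly as plain strings.
conversations <- toy %>%
  group_by(conversation_id) %>%
  summarise(
    n_tweets       = n(),
    n_participants = n_distinct(username),
    first_tweet    = min(created_at),
    last_tweet     = max(created_at)
  ) %>%
  arrange(desc(n_tweets))

print(conversations)
```

This treats the platform's own definition of a thread as the unit of analysis, which is exactly the kind of choice worth questioning.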

All this is barely scratching the surface. The important thing is knowing what to do with the data. How does one make sense of it? These are questions I alluded to at the top of this post.

The "technical" stuff is trivial, I think. By that I mean the technologies required to assemble and engage with the data. I'm not a big fan of black-boxed online discourse analysis software, because of the way it "forces" a particular way of engaging with the data. Such tools in effect produce a particular world in which we, the researchers, have very little agency, as the agential realists would say. So my goal here is to understand and develop theoretical vocabularies and tools to engage with the data, and then provide technological tools to execute on those theoretical tools, not the other way around, as is unfortunately often the case.
